What data challenge will you tackle this new year?
One year working with partners at Snowflake

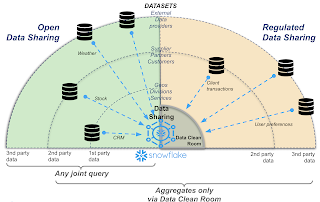

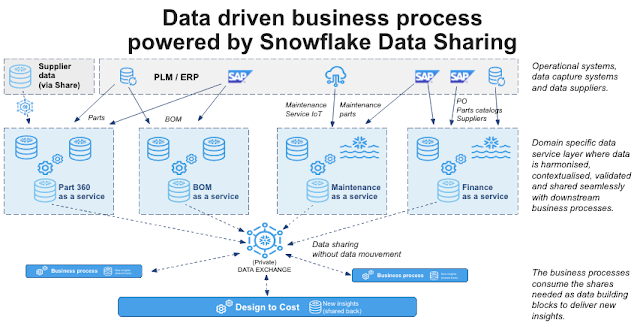

With the new year comes the retrospective. It is also an opportunity to give some visibility into what the partner sales engineer role involves. We currently have two positions open in Europe (UK and Germany), and many more to come! Don't hesitate to contact me to learn more.

2021, the year of Data Mesh

Data Mesh has been a trending architecture topic for several months. It now comes up in most of our discussions with large accounts that have good data maturity. Naturally, it resonates very well with the Snowflake Data Cloud. It builds on the ability to seamlessly share data between Snowflake accounts ("domains") wherever they are, and on the elastic, scalable resources that can be allocated to each of these domains.

Domain centric architecture

Actually, a year ago, before I knew about Data Mesh architecture principles, I wrote a blog post called "Domain centric architecture: Data driven business process powered by Snowflake Data Sharing". What a mistake! I should have called it ...