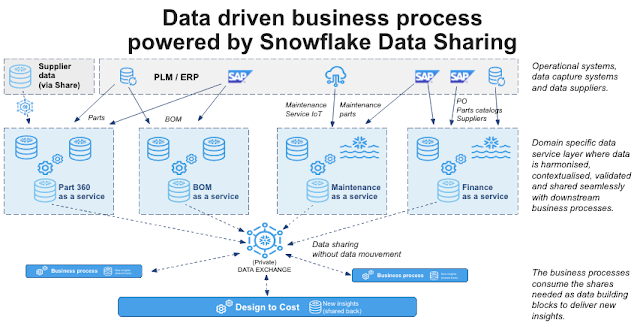

Domain-centric architecture: data-driven business processes powered by Snowflake Data Sharing

A few months ago I was talking with the Data Management director of a large manufacturer. We discussed the architecture principles behind a domain-centric data service layer that would feed business processes with harmonised, contextualised and validated data.

In each domain, the data would be owned by domain experts who have extensive knowledge of the data requirements, source characteristics and usage. Each domain then serves its data "as a service" so that business processes can consume it and join it with data from other domains.

This vision makes it possible to drive new use cases and value quickly by leveraging pre-existing domains (and the effort already invested in them) without rebuilding the whole data stack for each new "product". However, realising it at scale faces several barriers. Today the data is usually moved multiple times to be prepared, and then sometimes moved again to each individual product that needs it. This creates delays, governance and quality issues and, of course, infrastructure and operational costs. APIs could be an alternative, but they do not provide the ability to join multiple datasets from different domains at scale.

Breaking the barriers: The Data Cloud

Here comes the Snowflake Data Cloud. The Data Cloud is a network connecting thousands of clients, in which data can be shared without moving it. The fundamental benefit is that the data exists once, at the producer. Consumers access it from there and use the Snowflake compute layer to query it and join it with their own datasets, and potentially with datasets from other producers.

What is the impact?

- Immediate time to value

- Data always fresh (data as-is from the producer)

- Data always at producer quality (no degradation during export/reprocessing)

- End-to-end governance of the data

- No data export, ingestion, processing or validation steps: faster and cheaper

This is a real game changer for making the domain-centric architecture happen. We usually think about Data Sharing in the context of a client/supplier relationship. It can indeed be used to share data with "the extended enterprise", but it can also be used between entities of a single client or group.

Now imagine that each domain of a company has its own Snowflake account, where it consolidates multiple sources and designs datasets to be served "as a service" to client business processes. The global organisation can apply common governance and security rules to all the domains, while each domain remains managed by its domain experts.
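As a minimal sketch of the producer side, the Python helper below builds the Snowflake SQL statements a domain account might run to expose one table through a Share. The share, database, schema, table and account names are all hypothetical, and the helper only produces SQL text; it does not connect to Snowflake.

```python
def producer_share_statements(share, database, schema, table, consumer_accounts):
    """Build the SQL a domain (producer) account could run to share one table.

    All object and account names passed in are illustrative assumptions.
    """
    accounts = ", ".join(consumer_accounts)
    return [
        # Create the share object in the producer account.
        f"CREATE SHARE {share}",
        # A share needs usage on the database and schema that hold the data.
        f"GRANT USAGE ON DATABASE {database} TO SHARE {share}",
        f"GRANT USAGE ON SCHEMA {database}.{schema} TO SHARE {share}",
        # Expose the dataset itself, read-only.
        f"GRANT SELECT ON TABLE {database}.{schema}.{table} TO SHARE {share}",
        # Make the share visible to the consuming accounts.
        f"ALTER SHARE {share} ADD ACCOUNTS = {accounts}",
    ]


# Hypothetical "parts" domain sharing its curated table with one consumer account.
statements = producer_share_statements(
    share="parts_share",
    database="parts_db",
    schema="curated",
    table="parts",
    consumer_accounts=["myorg.design_to_cost"],
)
for statement in statements:
    print(statement + ";")
```

Nothing is copied at any step: the grants only describe which objects the consuming accounts are allowed to read in place.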

In the example above, the Design to Cost business process can consume live data from the parts, BOM and finance domains using Shares. Snowflake makes it possible to query and join these Shares, and to do so at massive scale.
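On the consumer side, this could look roughly like the sketch below: the Design to Cost account mounts each Share as a read-only database and then joins them in a single query. The provider account identifiers, share names, table names and columns (`part_id`, `quantity`, `unit_cost` and so on) are assumptions made up for illustration, and the code only builds SQL text.

```python
# Hypothetical mapping: local database name -> (provider account, share name).
DOMAIN_SHARES = {
    "parts_db": ("myorg.parts_domain", "parts_share"),
    "bom_db": ("myorg.bom_domain", "bom_share"),
    "finance_db": ("myorg.finance_domain", "finance_share"),
}


def mount_share_statements(domain_shares):
    """Build one CREATE DATABASE ... FROM SHARE statement per domain share."""
    return [
        f"CREATE DATABASE {local_db} FROM SHARE {provider}.{share}"
        for local_db, (provider, share) in domain_shares.items()
    ]


def design_to_cost_query():
    """Join the three shared domains to estimate a material cost per product.

    Table and column names are illustrative assumptions, not a real schema.
    """
    return (
        "SELECT b.product_id,\n"
        "       SUM(b.quantity * f.unit_cost) AS material_cost\n"
        "FROM bom_db.curated.bom AS b\n"
        "JOIN parts_db.curated.parts AS p ON p.part_id = b.part_id\n"
        "JOIN finance_db.curated.part_costs AS f ON f.part_id = p.part_id\n"
        "GROUP BY b.product_id"
    )


for statement in mount_share_statements(DOMAIN_SHARES):
    print(statement + ";")
print(design_to_cost_query() + ";")
```

The query runs on the consumer's own compute, while each table it reads still lives in its producer's account.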

To deliver the service, it is then possible to leverage all the capabilities of Snowflake, such as running multiple workloads on these datasets concurrently: enriching the data, training machine learning models and making predictions, delivering exploration and visualisation experiences to end users, and so on.